Blending Diffusion Models

Given two different concepts, can we blend them together with diffusion models?

This is the main research question we set out to answer in our latest work, which will be published soon. Short answer: yes, we can. Long answer: it's a bit more complicated than that, and there are many different ways to do it.

The paper is currently in press and will be presented at the end of this month. This post gives a brief overview of our work and the results we obtained, so I will not go into methodological details. If you are interested, feel free to contact me or refer to the paper once it is published. I will update this post with the link to the paper as soon as it is publicly available.

Our research explores four different methods, one of which is, to the best of our knowledge, novel. The methods are:

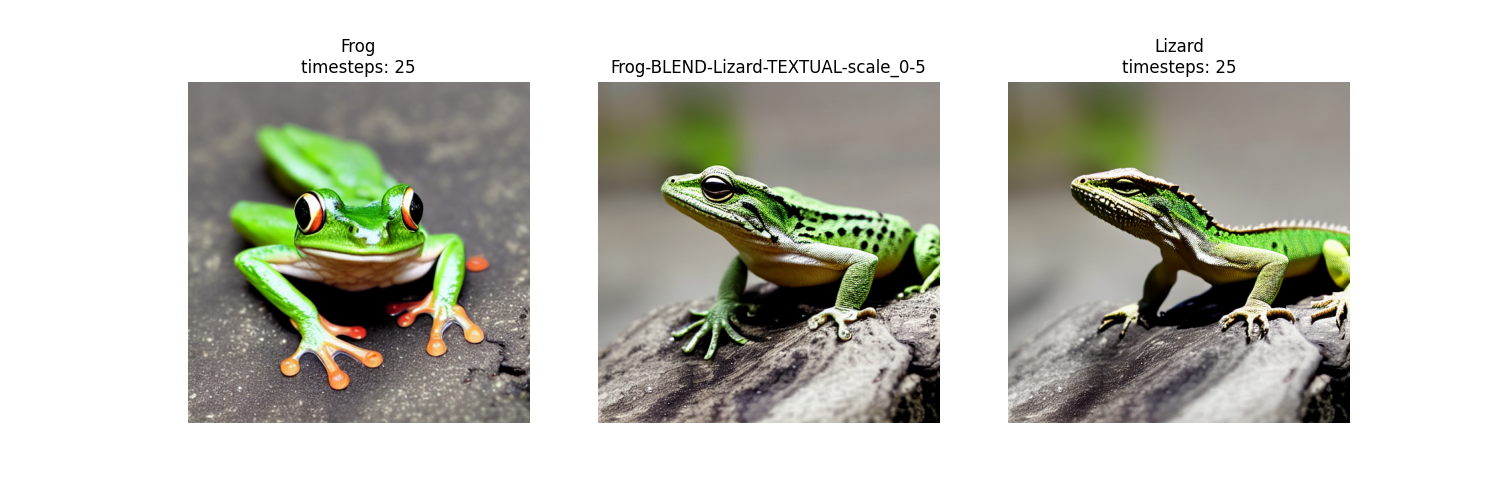

- TEXTUAL: the blend of the two concepts is obtained by linearly interpolating their text embeddings.

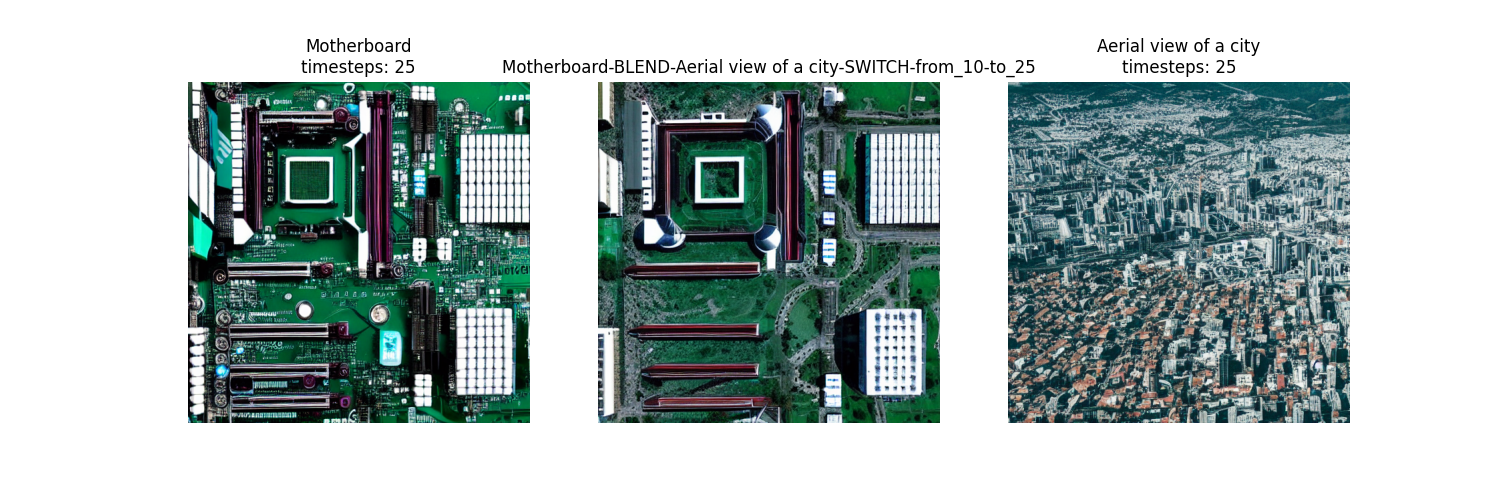

- SWITCH: starts the denoising process with one concept and switches to the other concept at a predefined step.

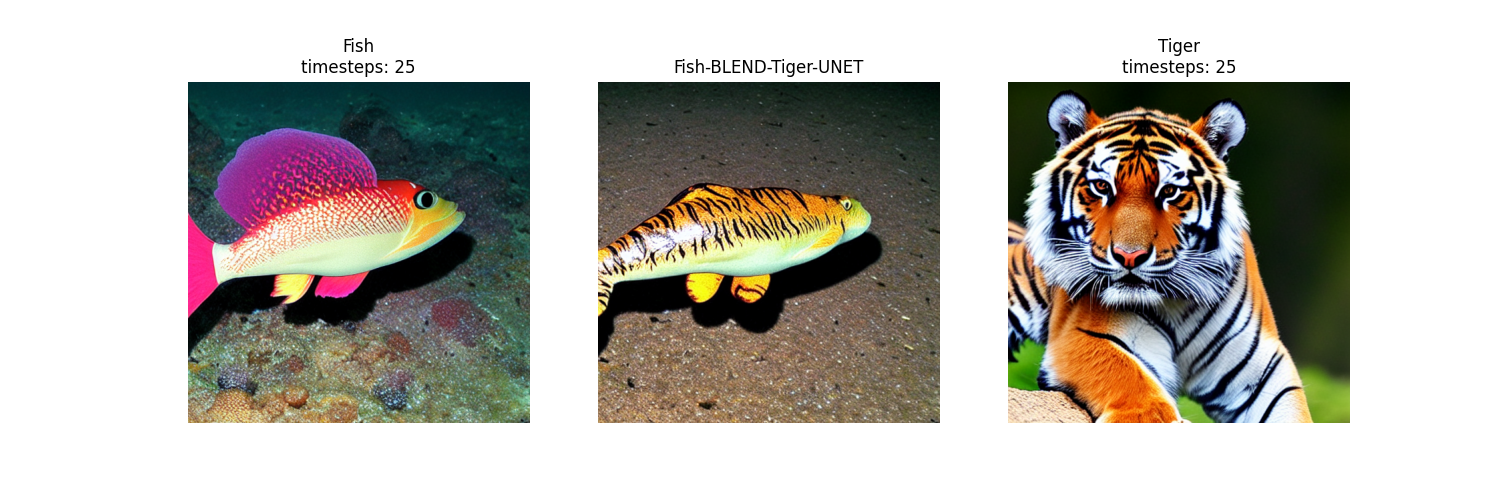

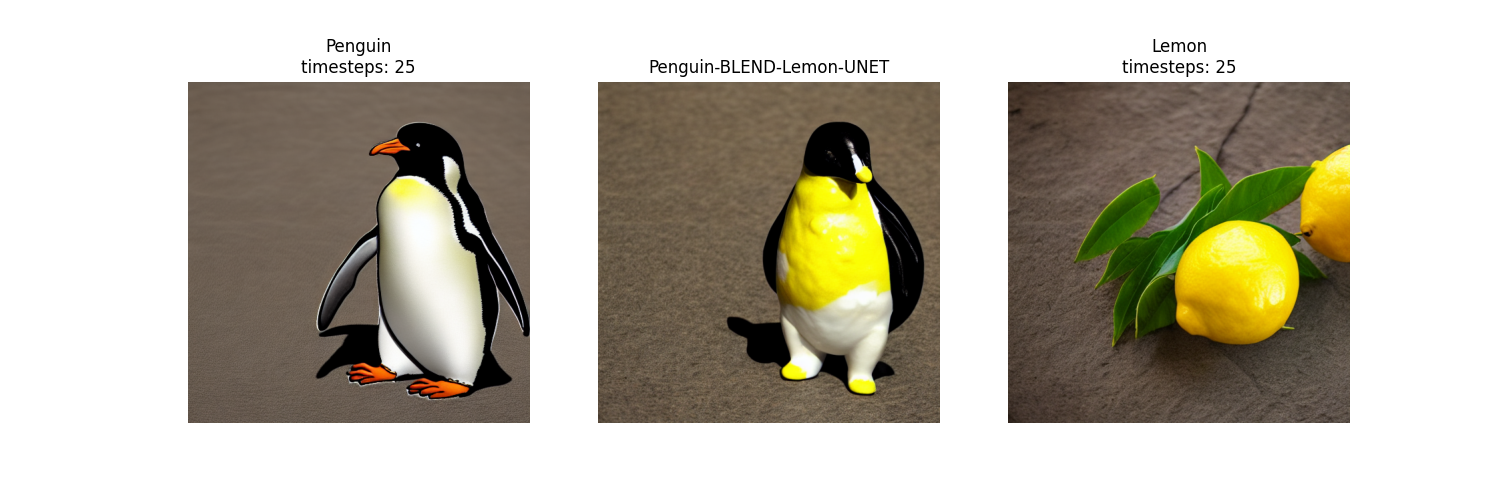

- UNET: at each diffusion step, the U-Net encodes the image latent conditioned on the first concept and then decodes it conditioned on the second one.

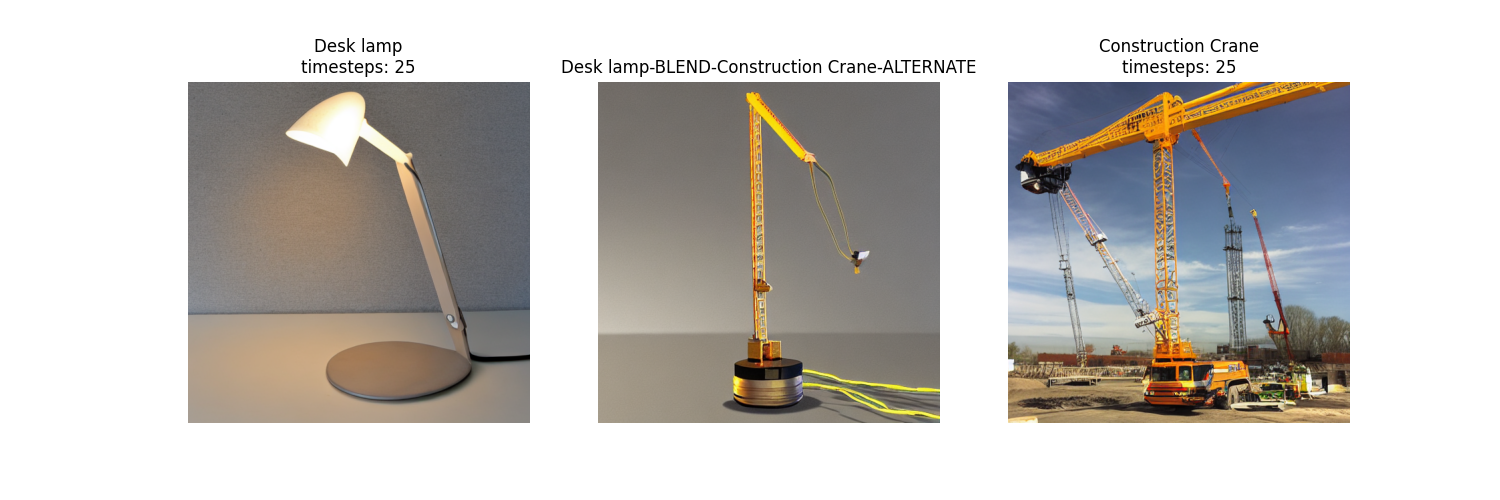

- ALTERNATE: uses the first concept for even diffusion steps and the second one for odd steps.
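To make the differences between the four methods concrete, here is a toy sketch that reduces each one to a choice of conditioning per denoising step, assuming the U-Net can be split into an encoder (down) half and a decoder (up) half that each receive a conditioning vector. It is not the implementation from the paper or the repository: `conditioning_schedule`, `toy_denoise`, the `switch_at` and `alpha` defaults, and the toy encoder/decoder are hypothetical, and the update rule is a placeholder rather than a real scheduler step.

```python
import numpy as np


def conditioning_schedule(method, c1, c2, steps=25, switch_at=10, alpha=0.5):
    """Return one (encoder_cond, decoder_cond) pair per denoising step.

    c1, c2: text embeddings of the first and second prompt (arrays of equal shape).
    switch_at and alpha are hypothetical defaults, not values from the paper.
    """
    if method == "TEXTUAL":
        # A single fixed embedding: linear interpolation of the two prompts.
        mix = (1.0 - alpha) * c1 + alpha * c2
        return [(mix, mix)] * steps
    if method == "SWITCH":
        # First prompt before the switch step, second prompt from then on.
        return [(c1, c1) if t < switch_at else (c2, c2) for t in range(steps)]
    if method == "UNET":
        # Encoder half sees the first prompt, decoder half the second, at every step.
        return [(c1, c2)] * steps
    if method == "ALTERNATE":
        # Even steps (0, 2, 4, ...) use the first prompt, odd steps the second.
        return [(c1, c1) if t % 2 == 0 else (c2, c2) for t in range(steps)]
    raise ValueError(f"unknown method: {method!r}")


def toy_denoise(latent, schedule, encode, decode):
    """Toy denoising loop with a noise predictor split into encode/decode halves.

    encode/decode are stand-ins for the U-Net down and up paths; the update
    below is a placeholder, not a real diffusion scheduler step.
    """
    steps = len(schedule)
    for enc_cond, dec_cond in schedule:
        hidden = encode(latent, enc_cond)
        noise_pred = decode(hidden, dec_cond)
        latent = latent - noise_pred / steps
    return latent
```

A quick sanity check of this framing: with two identical prompts, all four schedules yield the same trajectory, since the methods differ only in which conditioning each half of the network sees at each step.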

Once again, for a detailed explanation, implementation, and comparison of these blending methods, please refer to the paper.

Experimental setup

Each of the methods described in the paper is implemented from scratch using the Diffusers library; the full implementation is available in my GitHub repository blending-diffusion-models.

The pipelines use the U-Net, text encoder, tokenizer, and VAE weights from CompVis/stable-diffusion-v1-4. All samples shown here are generated with the same UniPCMultistepScheduler at 25 inference steps.
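For reference, the setup above can be approximated with the stock Diffusers pipeline. This is a hedged sketch, not the code from the repository: the helper names (`load_pipeline`, `encode_text`, `textual_blend`), the seed, and the `alpha` default are hypothetical, and it assumes a recent Diffusers release whose `StableDiffusionPipeline` accepts precomputed `prompt_embeds`. Only a TEXTUAL-style blend fits the stock pipeline this way; SWITCH, UNET, and ALTERNATE need a custom denoising loop.

```python
# Settings stated in the post; everything else is left at library defaults.
MODEL_ID = "CompVis/stable-diffusion-v1-4"
NUM_INFERENCE_STEPS = 25


def load_pipeline(device="cuda"):
    """Load SD v1.4 (U-Net, text encoder, tokenizer, VAE) with a UniPC scheduler."""
    # Imported here so the module itself can be imported without diffusers installed.
    from diffusers import StableDiffusionPipeline, UniPCMultistepScheduler

    pipe = StableDiffusionPipeline.from_pretrained(MODEL_ID)
    # Replace the default scheduler with UniPC, reusing the checkpoint's scheduler config.
    pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
    return pipe.to(device)


def encode_text(pipe, prompt):
    """Return CLIP text embeddings of shape (1, 77, 768) for a single prompt."""
    import torch

    tokens = pipe.tokenizer(
        prompt,
        padding="max_length",
        max_length=pipe.tokenizer.model_max_length,
        truncation=True,
        return_tensors="pt",
    )
    with torch.no_grad():
        return pipe.text_encoder(tokens.input_ids.to(pipe.device))[0]


def textual_blend(pipe, prompt_a, prompt_b, alpha=0.5, seed=0):
    """TEXTUAL-style blend: linearly interpolate the two embeddings, then denoise."""
    import torch

    # torch.lerp computes (1 - alpha) * emb_a + alpha * emb_b.
    emb = torch.lerp(encode_text(pipe, prompt_a), encode_text(pipe, prompt_b), alpha)
    generator = torch.Generator(device=pipe.device).manual_seed(seed)
    return pipe(
        prompt_embeds=emb,
        num_inference_steps=NUM_INFERENCE_STEPS,
        generator=generator,
    ).images[0]
```

With two placeholder prompts, `textual_blend(load_pipeline(), "a lion", "a tiger")` would return a PIL image; fixing the seed keeps the initial noise identical across the two source prompts and the blend, which makes side-by-side comparisons fairer.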

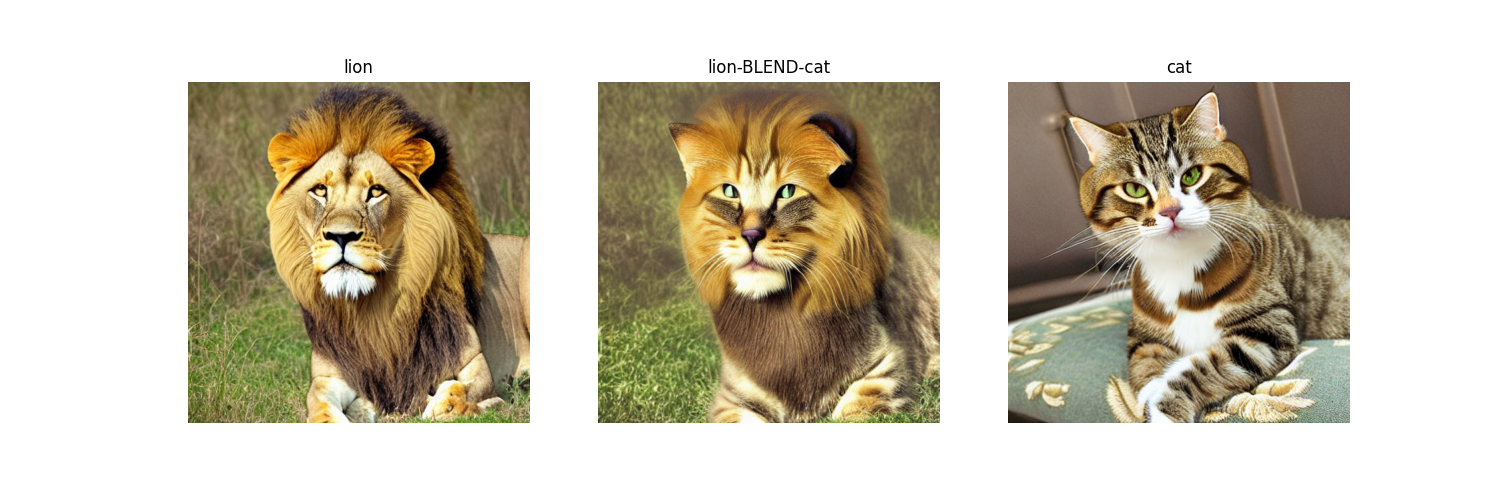

In the following figures, the image on the left is generated from the first prompt, the image on the right from the second prompt, and the image in the middle is the blend of the two, obtained with the method indicated in the caption and title.

Conclusions

The results of this research show that diffusion models can blend different concepts; however, our findings indicate that there is no single definitive method for doing so. Each method exhibits different behaviors and effects, and the results vary depending on the semantic distance between the two concepts, as well as on the spatial similarity of the two images synthesized from the respective prompts.

This research is part of my Master's thesis on understanding the spatial behavior of diffusion models. If you are interested in this topic and would like to collaborate, feel free to reach out through any of the contacts at the bottom of this page. I will update the post with the link to the paper as soon as it is published. Stay tuned!